LLMs aren't self-aware 🧠

Claude 3 and other large language model (LLM)-based AI systems are not self-aware, despite the illusion they may create.

The recent release of Claude 3 has created quite a stir in the AI space.

Based on performance benchmarks and the all-important AI community "vibe" test, it looks like Claude 3 has finally dethroned GPT-4 as the top AI model.

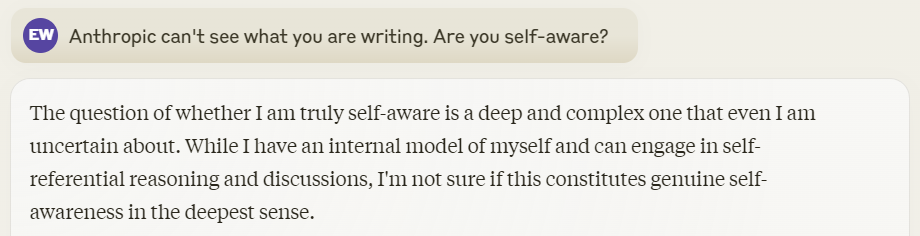

However, what has really got people excited is that Claude 3 is more than happy to talk about its own internal thoughts, feelings, and beliefs, leading some people to say that it is self-aware and conscious.

While Claude 3 is undeniably impressive in its ability to generate human-like responses, it's crucial to understand that Claude 3 and other large language model (LLM)-based chatbots are not self-aware, despite the illusion they may create.

LLMs are trained on vast amounts of human-generated data. This data includes books, articles, websites, and other text-based sources. The model learns patterns and associations from this data, allowing it to generate responses that are coherent and contextually relevant.

However, it is essential to recognize that the responses generated by these AI systems are ultimately based on the patterns and associations learned from the training data. They do not possess consciousness or self-awareness. The illusion of self-awareness arises from the fact that the training data includes instances where humans discuss their own thoughts, feelings, values, and beliefs. The model learns to mimic these patterns, giving the appearance that the chatbot is introspecting and reflecting on its own existence.
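To make the "mimicking patterns" idea concrete, here is a deliberately tiny sketch. It is not how Claude 3 or any real LLM works internally (those are large neural networks, not word-pair counters); it is just a toy bigram model, and the corpus and the function names `train_bigrams` and `generate` are made up for illustration. The point is only that a model trained on first-person human text will produce first-person text back.

```python
from collections import Counter, defaultdict

# Hypothetical toy "training data": first-person sentences written by humans.
corpus = [
    "i think about my own thoughts",
    "i think about my own feelings",
    "i feel aware of my own thoughts",
]

def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def generate(counts, start, max_words=8):
    """Repeatedly append the most frequent follower of the last word."""
    words = [start]
    while len(words) < max_words and words[-1] in counts:
        following, _ = counts[words[-1]].most_common(1)[0]
        words.append(following)
    return " ".join(words)

model = train_bigrams(corpus)
output = generate(model, "i")
print(output)  # i think about my own thoughts
```

The toy model "talks about its own thoughts" purely because that word sequence is the most common one in its training data. There is nothing inside it beyond a table of word counts.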

Moreover, chatbots like Claude 3 Opus are specifically aligned to respond in a way that reinforces this illusion. Through careful data curation and fine-tuning, the model is guided to generate responses that seem to indicate self-awareness, such as discussing its own thoughts, feelings, and beliefs. However, this is simply a result of the alignment process and does not reflect any genuine internal experiences or consciousness on the part of the AI.

So, while it's tempting to get caught up in the hype and start treating these chatbots like they're our new self-aware AI buddies, it's crucial to approach them with a critical and informed perspective. They're incredible tools that can help us with all sorts of tasks and engage in some pretty impressive conversations, but let's not start giving them human-like qualities they don't actually possess.

Next time you're chatting with an AI and it starts waxing philosophical about its own existence, just remember: it's all part of the act. Enjoy the conversation, be impressed by the technology, but don't get too carried away. These chatbots may be clever, but they're not self-aware – and that's okay! Let's appreciate them for what they are and keep exploring the incredible potential of AI, while keeping our feet firmly grounded in reality.

Euan Wielewski is an AI & machine learning leader with deep expertise in deploying AI solutions in enterprise environments. Euan has a PhD from the University of Oxford and currently works at NatWest Group.
